CLIENT

Puppet

SKILLS

UX research, analytics, prototyping, task-based usability testing

TOOLING

Optimal Workshop, Calendly, MailChimp, Dovetail, Figma, Axure, Google Sheets, Zoom

Forge module discovery research

Puppet Forge is an open source catalogue of modules created by Puppet and its community. Modules are essentially packages of code that serve as the basic building blocks of Puppet, helping ITOps practitioners supercharge and simplify their automation processes.

The problem

The Forge contains over 6,000 modules and, because it is open source, their quality varies greatly. Each module has its own details page containing a vast array of complex information, including how to download, install and deploy the module. Often several modules address the same use case, so choosing between them can be overwhelming and time-consuming for users.

Desired outcome

How can we improve the module discovery process so that users can easily find, choose and install the module that best suits their needs?

Stage 1 - Analyse

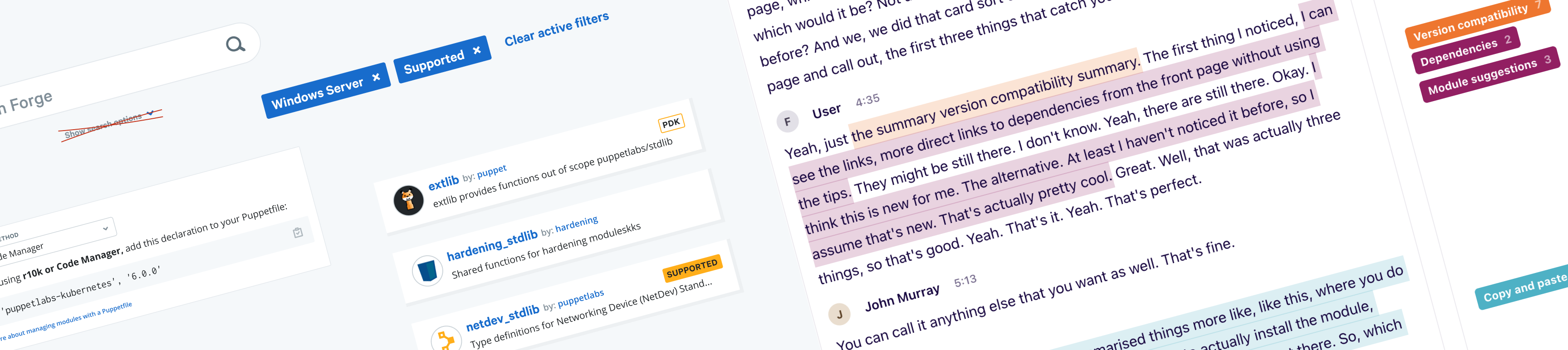

To understand the problems users might have with module discoverability, we first reviewed the Google Analytics data, focusing on search and filter usage.

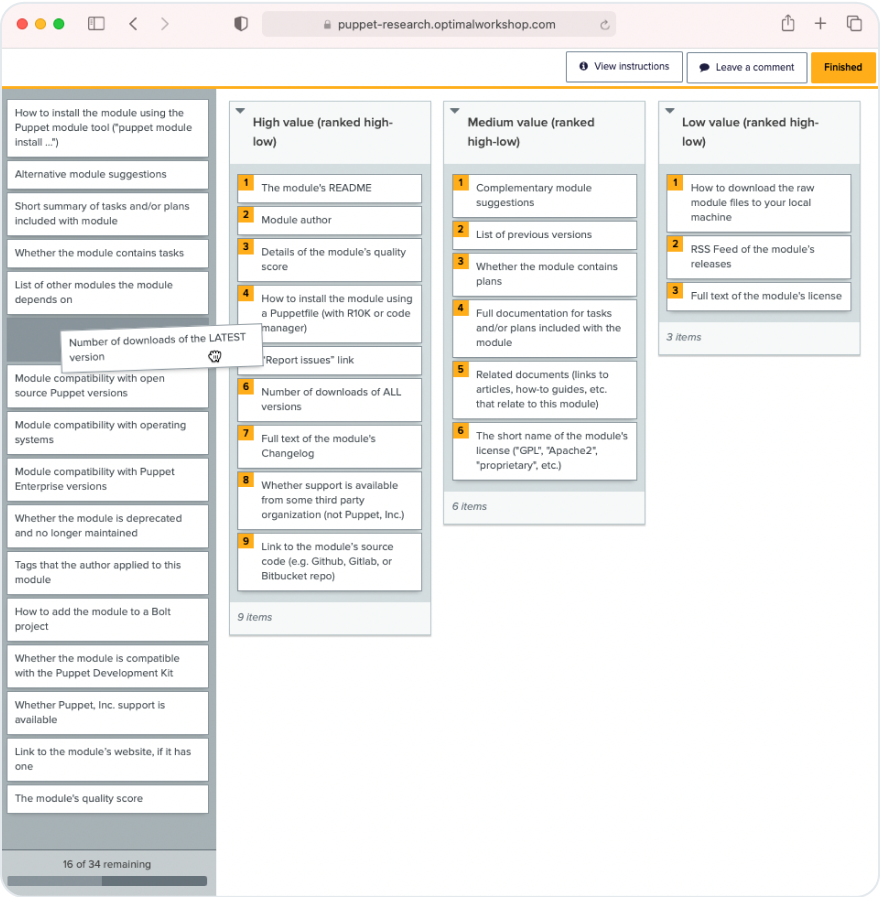

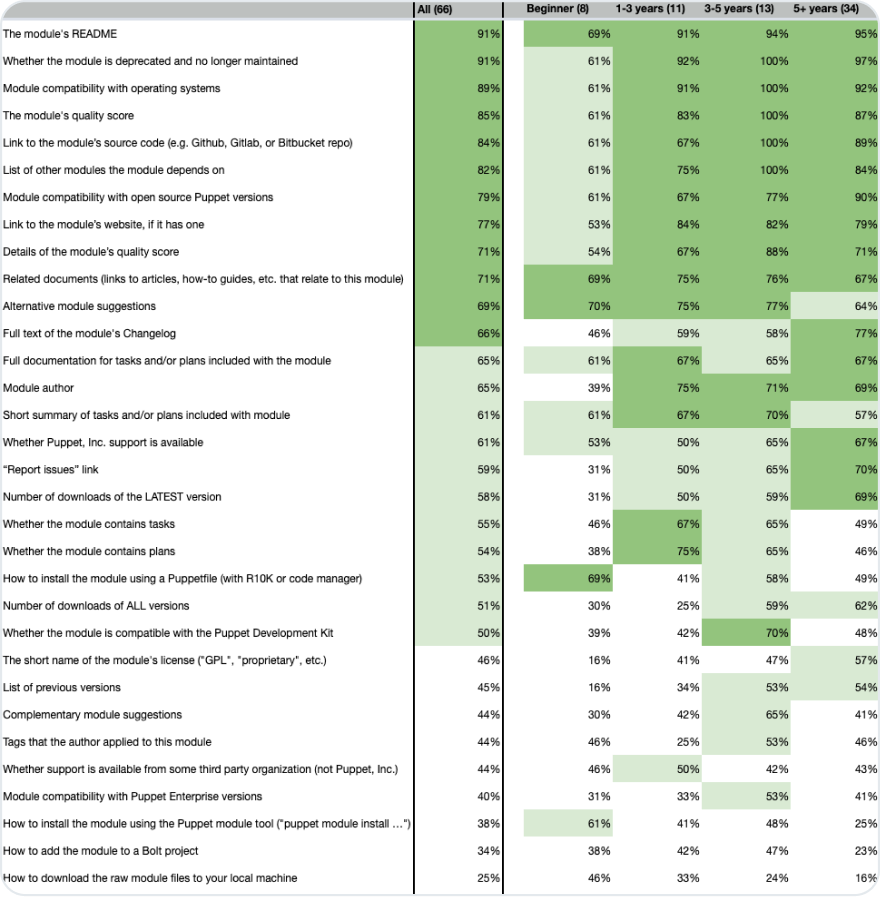

Next, we wanted to understand what content mattered to Forge users. Using an intercept snippet on the Forge module detail pages, we invited users to take part in a self-guided card sorting exercise. Participants were given a list of cards, each describing a piece of content from the module detail page, and sorted them into columns according to whether they saw that content as high, medium or low value.

The response to this exercise was strong: 103 users completed the study and 80% opted in to take part in future research.

An accompanying questionnaire allowed us to segment users by experience level, helping us make sure that any improvements would benefit users across the board.

Using the features that users ranked as high or medium value, we built a prioritised list split into definite focus areas (ranked high or medium by over 66% of users) and areas worth considering (ranked high or medium by over 50% of users).
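The prioritisation rule can be sketched in a few lines of Python, showing how card-sort tallies might be sorted into the two buckets using the thresholds above. The feature names and vote counts are hypothetical placeholders and do not come from the study. Only the total of 103 participants matches it. The function names are illustrative and not part of any tool we used.

```python
from typing import Dict, List, Tuple

# Hypothetical tallies: feature -> (high, medium, low) vote counts.
# Placeholder numbers for illustration only, not the study's data.
CARD_SORT: Dict[str, Tuple[int, int, int]] = {
    "Feature A": (70, 20, 13),
    "Feature B": (30, 30, 43),
    "Feature C": (15, 30, 58),
}

FOCUS_THRESHOLD = 0.66     # over 66% high/medium -> definite focus area
CONSIDER_THRESHOLD = 0.50  # over 50% high/medium -> worth considering


def high_or_medium_share(high: int, medium: int, low: int) -> float:
    """Fraction of participants who ranked a card as high or medium value."""
    total = high + medium + low
    return (high + medium) / total if total else 0.0


def classify(share: float) -> str:
    """Map a share onto the priority buckets using strict 'over' comparisons."""
    if share > FOCUS_THRESHOLD:
        return "focus"
    if share > CONSIDER_THRESHOLD:
        return "consider"
    return "not prioritised"


def prioritise(tallies: Dict[str, Tuple[int, int, int]]) -> List[Tuple[str, float, str]]:
    """Return (feature, share, bucket) rows, highest share first."""
    rows = []
    for feature, votes in tallies.items():
        share = high_or_medium_share(*votes)
        rows.append((feature, share, classify(share)))
    rows.sort(key=lambda row: row[1], reverse=True)
    return rows


if __name__ == "__main__":
    for feature, share, bucket in prioritise(CARD_SORT):
        print(f"{feature}: {share:.0%} -> {bucket}")
```

The strict comparisons follow the "over 66%" and "over 50%" wording. A card ranked high or medium by exactly 66% of users would therefore land in the "consider" bucket.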

Stage 2 - Improve

Based on the analysis, we came up with some solutions that we felt would improve module discovery. We mocked these up and built them into interactive prototypes so we could test them with users.

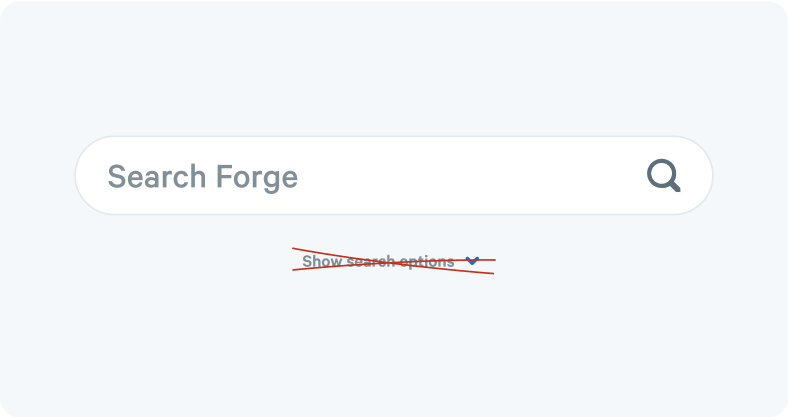

Removal of search options from search bar

Why? The analytics showed that these options were hardly used and that users overwhelmingly preferred filtering on the search results page.

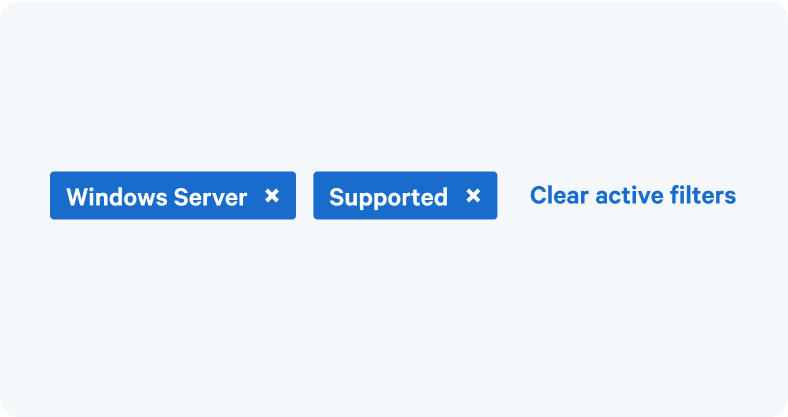

Introduction of visible persistent filters on search results page

Why? Filter pills didn’t exist yet, so adding them was a natural step. Users often apply the same filters repeatedly, so we thought making them persist across new searches might be useful.

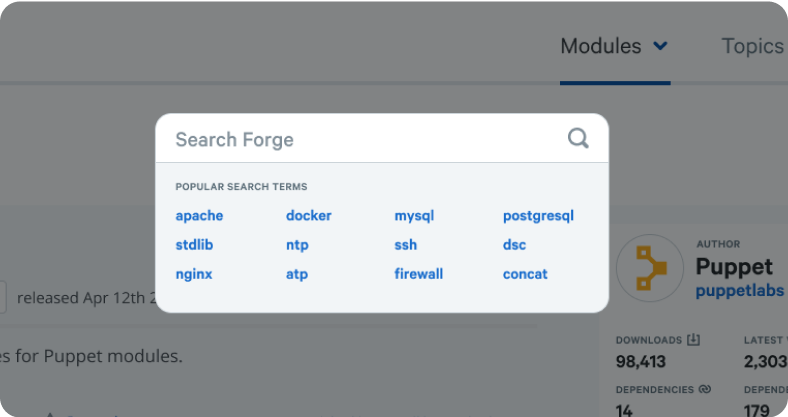

Introduction of search modal with popular search terms

Why? Autocomplete search would have been our preferred option, but it wasn’t feasible in the short term. As a stopgap, we wanted to find out whether showing the top 10 search terms would be valuable to users.

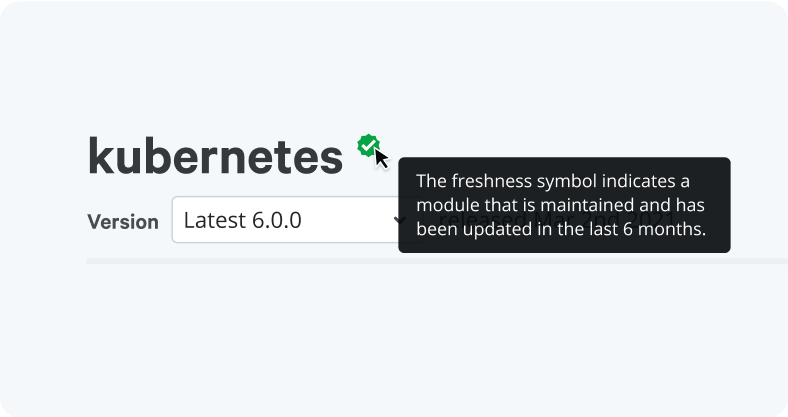

Addition of a freshness symbol

Why? A module that is actively maintained and frequently updated by multiple contributors tends to be of higher quality. That information isn’t immediately visible to users, so we wanted to see whether summarising it in a badge would be well received.

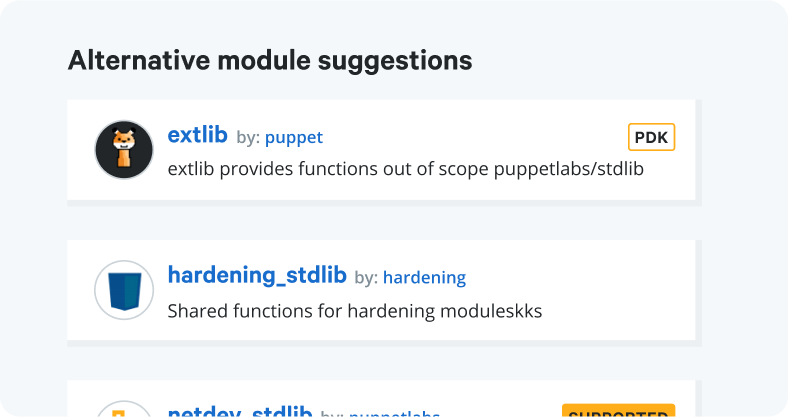

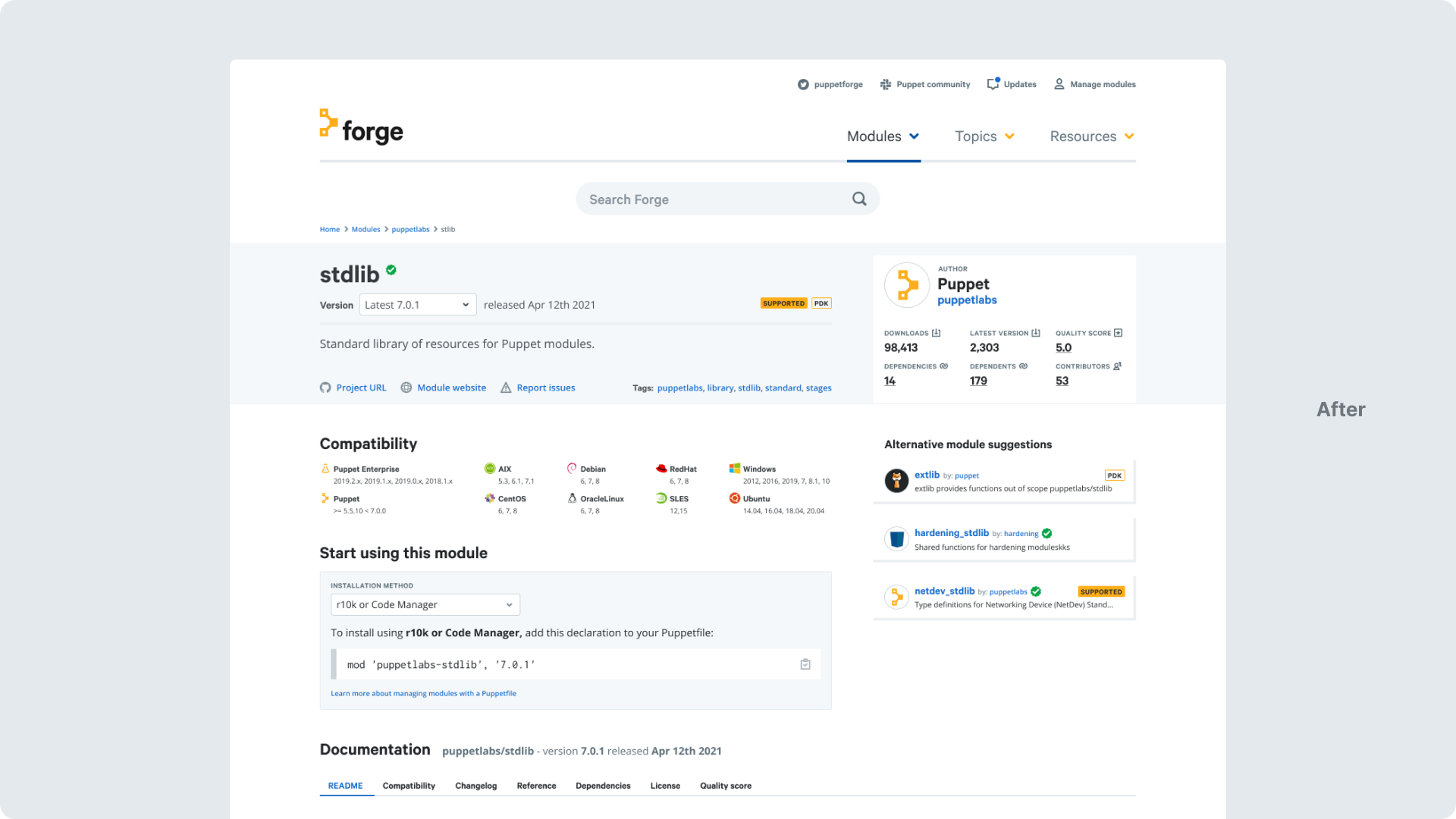

Alternative module suggestions on module detail page

Why? So that users could quickly navigate to suitable alternatives without having to jump back into search.

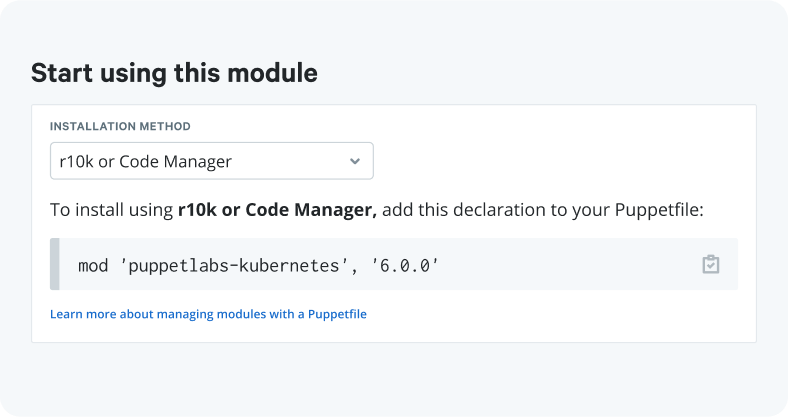

Replacing installation accordion with a dropdown

Why? r10k is both the recommended and the most popular installation method, so it doesn’t make sense to give the other methods equal prominence. The change also frees up a significant amount of vertical space.

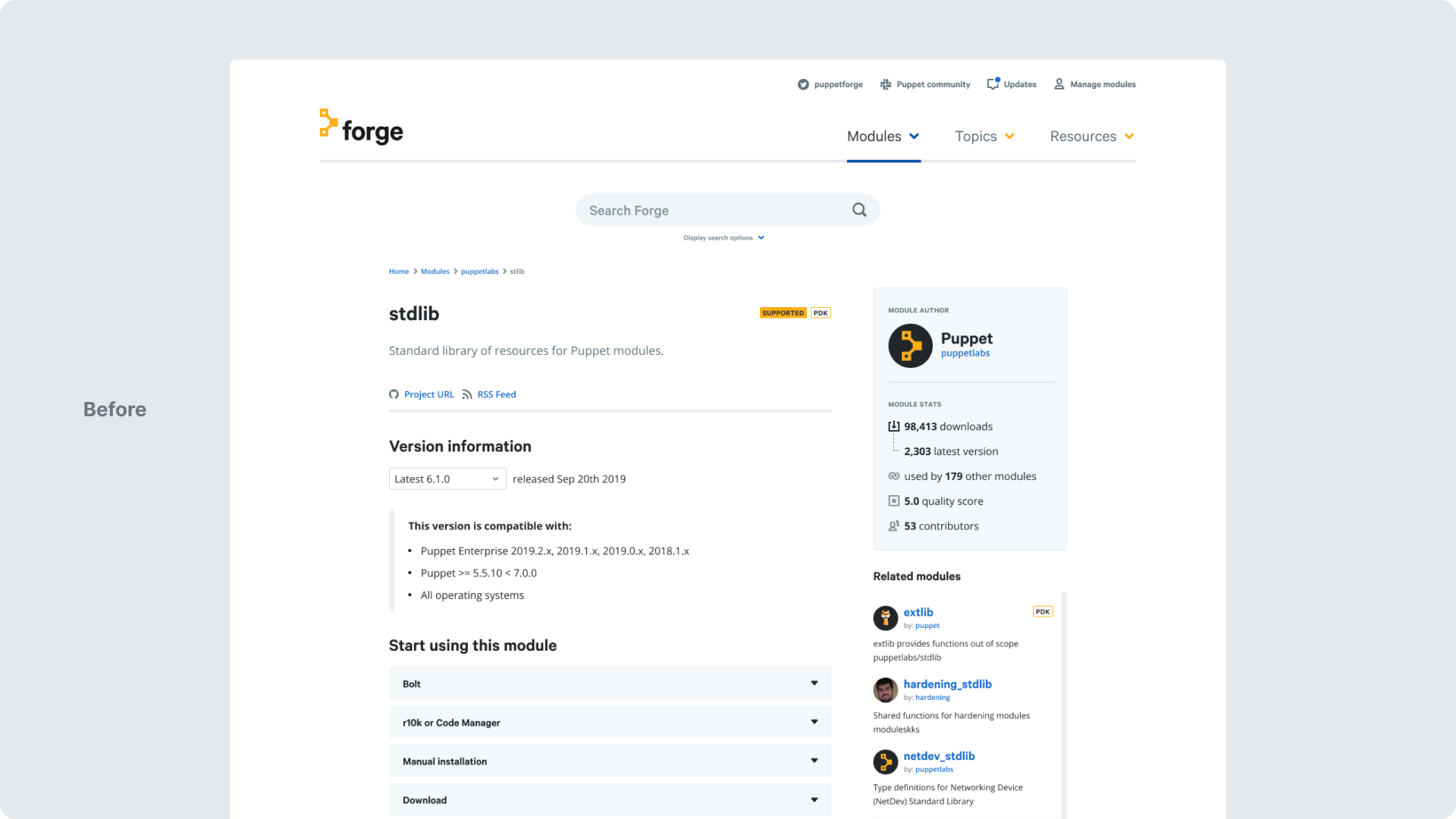

Improvements to the module detail page

In addition, using the card sort data, we redesigned the module detail page to improve the information hierarchy and make better use of the available space.

Stage 3 - Validate

In order to validate our improvements, we wanted to get answers to these core questions:

- What do users think of a freshness symbol?

- Do the changes to the module detail page improve the user experience?

- What do users think about the dropdown installation option?

- Do users trust Forge search to help them find the module they want?

- Do popular search terms in the search modal add value?

- Does removing ‘search options’ cause any frustration?

- Do users value filters that persist across searches?

We carried out six user research sessions over Zoom with participants spread across the globe. Each session followed a script: a set of fixed questions, an on-screen review of the new module detail page, and a task-based usability test in which participants took control of the screen and interacted with the prototype.

Stage 4 - Summarise

The key themes to emerge from the research were:

- The freshness symbol was positively received.

- The changes to the module detail page were welcomed as a step in the right direction, though they were seen as evolutionary rather than revolutionary.

- Users preferred the dropdown installation option to the previous accordion.

- Most users trust search to lead them to the module they want, even though it sometimes returns irrelevant results.

- Opinions on the popular search terms were mixed, but nobody disliked them outright.

- Removing search options from the search box wasn’t problematic.

- Most users saw persistent filters as adding value, and those who didn’t still didn’t see them as a blocker.

In summary, most of the changes were positively received and could go into production. We learnt that persistent filters need clearer explanation when users first see them, and that popular search terms could be dropped because they added little value. The research also surfaced suggestions for the future, such as reintroducing Quality Score information, remembering users’ preferences and exploring ways to improve the quality of user-generated documentation.

© John Murray 2024